On July 8, 2025, research by Professor Yang Kehu’s team was published online in Scientific Data, a Nature Portfolio journal. The paper is titled “MUSeg: A multimodal semantic segmentation dataset for complex underground mine scenes.” The first author is Li Shiyan, a PhD student in the school’s 2022 cohort, and the work was completed under the joint supervision of Professor Yang Kehu and faculty member Kong Qingqun.

Visual perception is a core technology for intelligent mining. Underground mine environments, however, are unusually complex: lighting varies drastically and is often extremely low, spaces are narrow and crowded, and the objects present are highly varied. Semantic segmentation methods that rely solely on visible light suffer from insufficient accuracy and poor robustness in mines, which severely limits the reliability of visual perception systems in these settings. Multimodal fusion offers a potential solution, but the lack of high-quality multimodal datasets for mine scenes has been a key bottleneck to applying it in this field.

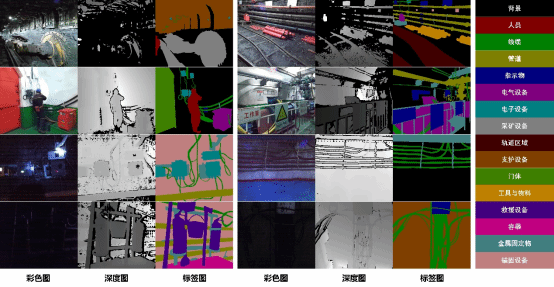

To address this issue, Professor Yang Kehu’s team constructed MUSeg, a multimodal semantic segmentation dataset for complex underground mine scenes. The dataset covers six underground mines of varying sizes in different regions of China, including one training gold mine and five production mines with diverse geological conditions and operational histories. It contains 3,171 RGB-D data pairs collected at 1,916 distinct locations and defines 15 semantic categories of objects typical of the mine environment: Person, Cable, Pipe, Indicator, Metal Fixture, Container, Tool & Material, Door, Electrical Equipment, Electronic Equipment, Mining Equipment, Anchoring Equipment, Support Equipment, Rescue Equipment, and Rail Area.

Fig. 1: Annotation examples from the multimodal semantic segmentation dataset for complex underground mine scenes.
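
For readers who want to work with the annotations, the 15 categories listed above can be written down as a simple label map. The following is a minimal Python sketch, not the dataset's official tooling: the names `MUSEG_CLASSES`, `CLASS_TO_ID`, and `class_pixel_counts` are hypothetical, and the numbering (IDs in listing order, starting at 0) is an assumption. The released dataset may number the categories differently or reserve an ID for background, so check its documentation before relying on these indices.

```python
from collections import Counter

# The 15 category names, in the order they are listed in the article.
MUSEG_CLASSES = [
    "Person", "Cable", "Pipe", "Indicator", "Metal Fixture", "Container",
    "Tool & Material", "Door", "Electrical Equipment", "Electronic Equipment",
    "Mining Equipment", "Anchoring Equipment", "Support Equipment",
    "Rescue Equipment", "Rail Area",
]

# Assumption: IDs follow the listing order, starting at 0. The released
# dataset may use a different numbering or a separate background ID.
CLASS_TO_ID = {name: i for i, name in enumerate(MUSEG_CLASSES)}


def class_pixel_counts(mask):
    """Count pixels per category name in a 2-D label mask (a list of rows).

    Labels outside the assumed 0..14 range are ignored.
    """
    counts = Counter(label for row in mask for label in row)
    return {
        MUSEG_CLASSES[label]: n
        for label, n in counts.items()
        if 0 <= label < len(MUSEG_CLASSES)
    }


# Toy 2x3 mask, invented for illustration only (not taken from the dataset).
toy_mask = [
    [0, 0, 1],
    [14, 14, 1],
]
print(class_pixel_counts(toy_mask))
```

A per-class pixel count of this kind is a common first check on a segmentation dataset, since it shows how unevenly the categories are represented.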

MUSeg fills the gap in high-quality datasets for this field and provides solid data support for visual perception technology in intelligent mine construction. It should help advance the safe, efficient, and intelligent development of the coal industry.

Citation: Li, S., Kong, Q., Gao, X., Shi, F., Li, L., Zhang, Q., Wang, P., & Yang, K. MUSeg: A multimodal semantic segmentation dataset for complex underground mine scenes. Sci Data 12, 1160 (2025).

Download the paper: https://link.springer.com/article/10.1038/s41597-025-05493-9

Dataset: https://doi.org/10.6084/m9.figshare.28749098.v2